Generative AI in Penetration Testing

Large Language Models and Generative AI, notably ChatGPT, have transformed numerous sectors, including the security industry. They have changed both offensive and defensive security strategies, and in pentest engagements AI has become a valuable ally that takes over monotonous tasks and makes complex ones easier.

AI's Role in Assisting Penetration Testers

Penetration testing demands proficiency in many technical and non-technical areas, and every engagement consumes substantial time and effort. AI can streamline pentest engagements and improve efficiency in the following ways:

Automated Pentest Report Writing

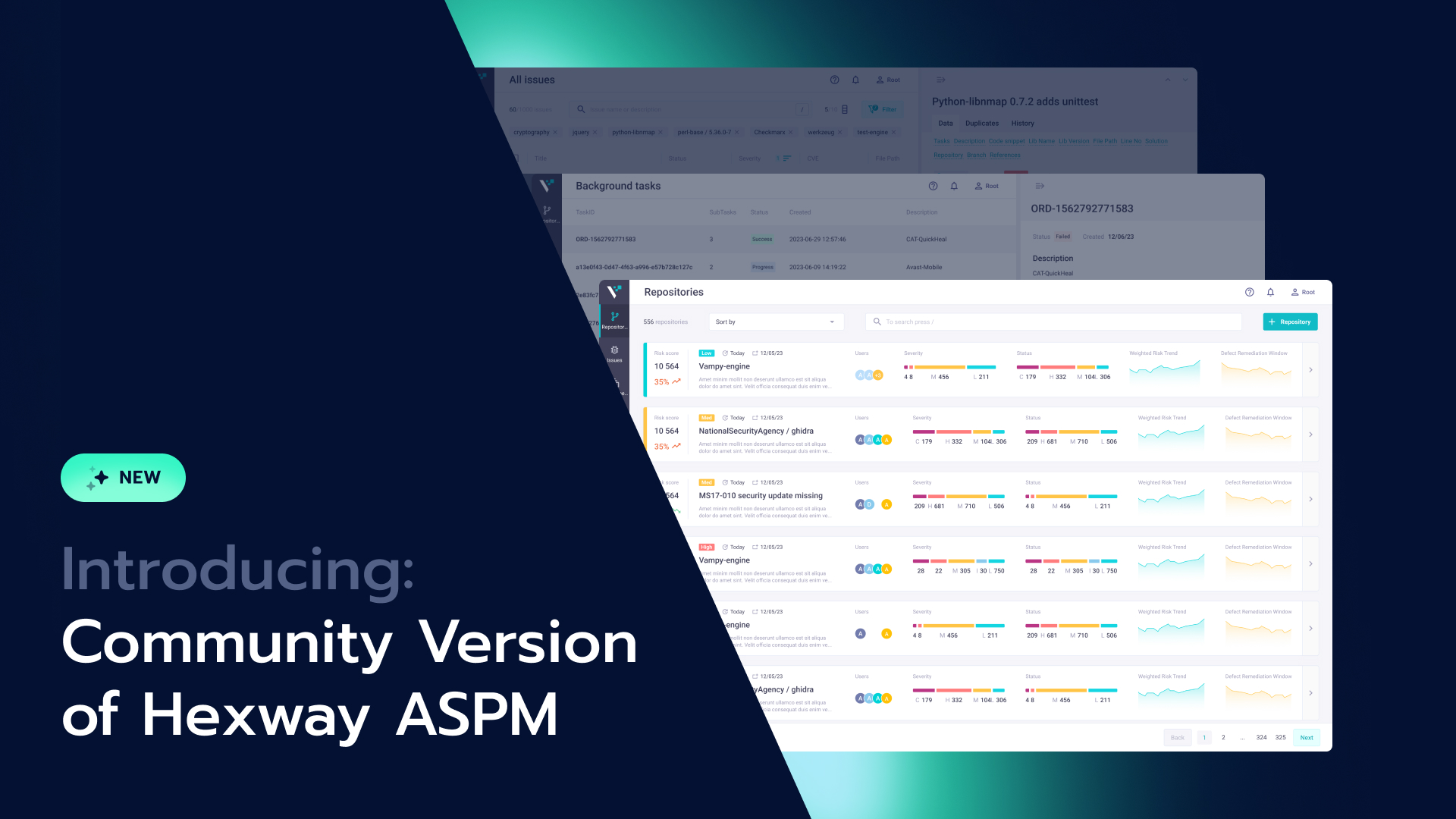

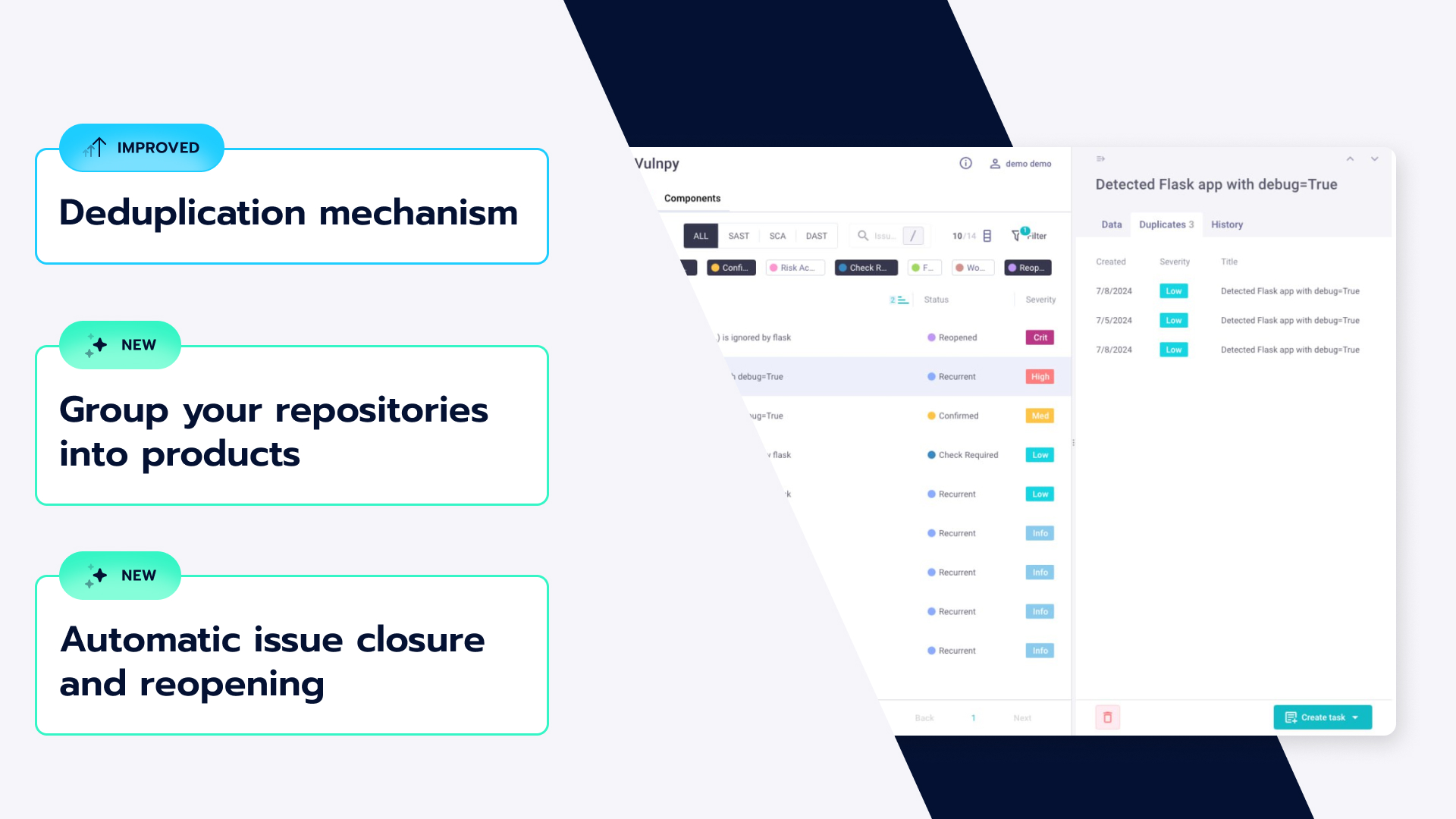

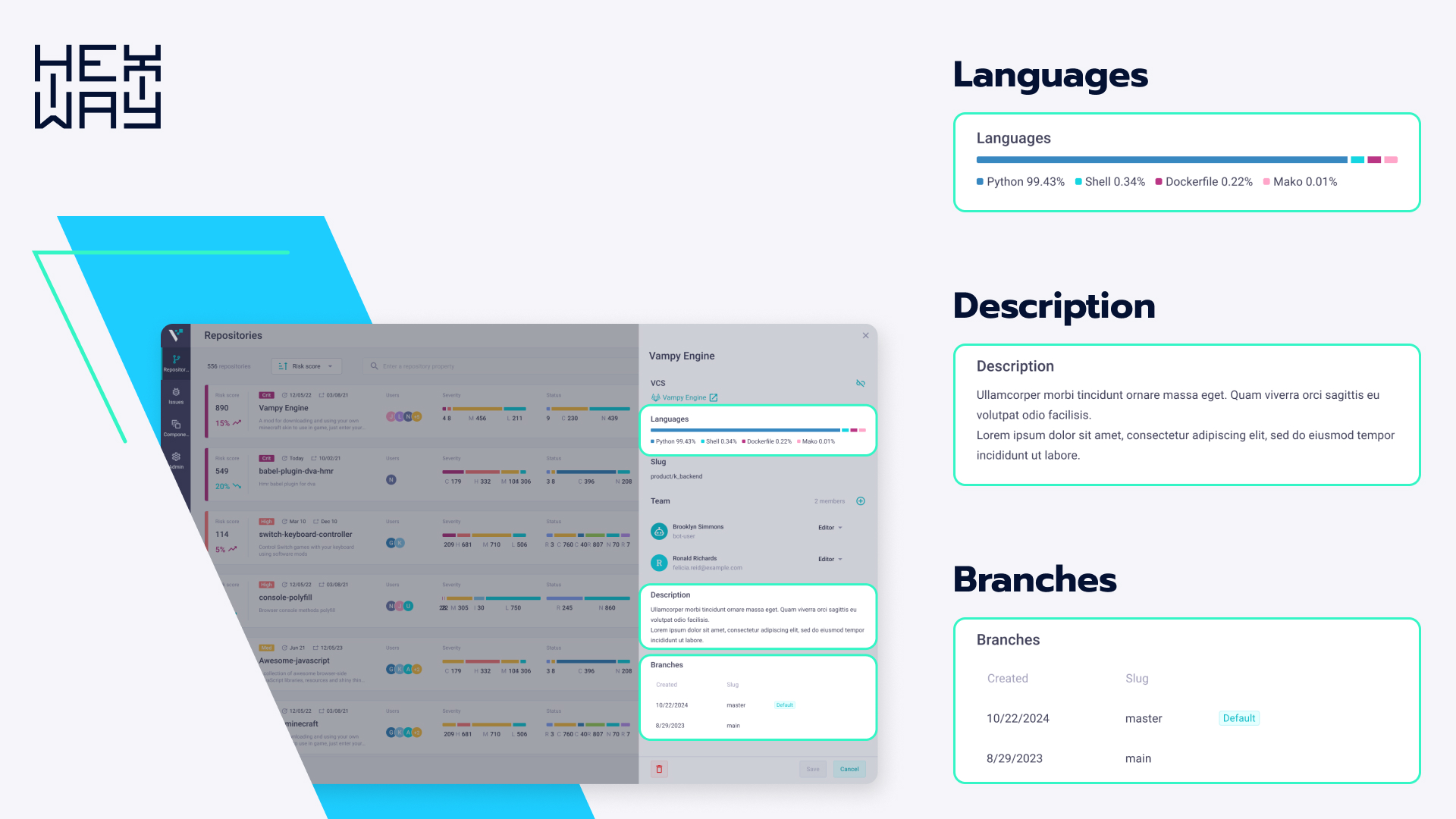

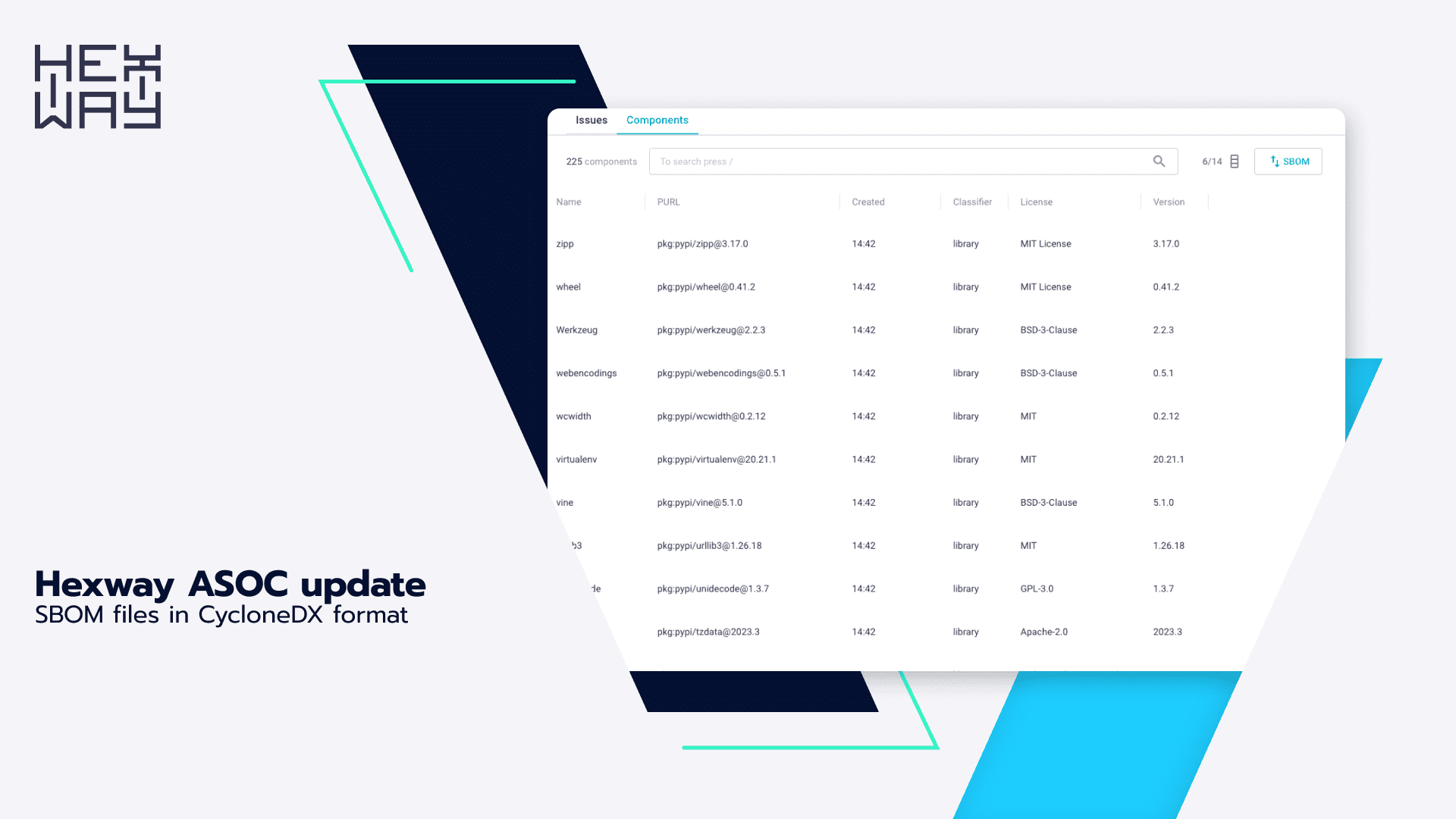

Report writing is often the least enjoyable part of any engagement and quickly becomes monotonous and tedious. Generative AI simplifies it by drafting reader-friendly executive summaries, suggesting impact statements and mitigation recommendations, and much more. Many testers go further and train custom GPT models on their previous reports, so that AI-generated documents stay consistent in tone and structure. Notably, Hexway Pentest Suite has integrated ChatGPT into its products, automating issue creation and making technical vulnerability descriptions more customer-friendly.
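As a rough illustration of the executive-summary use case, here is a minimal sketch that turns a list of findings into a request body, assuming the OpenAI Chat Completions request format (`model`, plus `messages` entries with `role` and `content`). The finding records, the `gpt-4` model name, the prompt wording, and the function name are hypothetical, and the code only builds the JSON body without calling any API. It is not how Hexway's integration works internally.

```python
import json

# Hypothetical finding records; in practice they would come from your
# reporting tool or your own notes.
findings = [
    {"title": "SQL injection in /login", "severity": "High",
     "detail": "The username parameter is concatenated into a SQL query."},
    {"title": "Outdated OpenSSH 7.2p2", "severity": "Medium",
     "detail": "The SSH service banner reports an end-of-life version."},
]


def build_summary_request(findings, model="gpt-4"):
    """Build (but do not send) a Chat Completions body asking for an executive summary."""
    # Flatten the findings into a compact, deterministic text block.
    lines = [f"- [{f['severity']}] {f['title']}: {f['detail']}" for f in findings]
    return {
        "model": model,
        "messages": [
            {"role": "system",
             "content": "You are a penetration tester writing for a non-technical "
                        "executive audience. Be concise and avoid jargon."},
            {"role": "user",
             "content": "Write an executive summary with business impact and "
                        "prioritized remediation for these findings:\n" + "\n".join(lines)},
        ],
        "temperature": 0.2,  # low temperature for more consistent wording across reports
    }


body = build_summary_request(findings)
print(json.dumps(body, indent=2))
```

Keeping the system message fixed and the temperature low is one simple way to get the report-to-report consistency mentioned above without training a custom model.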

Code Generation

Generative AI also speeds up the scripting side of pentesting. It can draft scripts in almost any programming language, handling tasks from simple to moderately complex. For instance, during a recent CTF, ChatGPT helped significantly in developing a Python script that decoded an XOR-encoded password without the key.
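The CTF script itself is not shown, so the sketch below assumes the simplest common case: a single-byte XOR key and a known plaintext prefix (a "crib"), here the hypothetical flag format `flag{`. Because XOR is its own inverse, trying all 256 possible keys and keeping the one whose output starts with the crib recovers both the key and the plaintext. The function names are illustrative.

```python
from typing import Optional, Tuple


def xor_single(data: bytes, key: int) -> bytes:
    """XOR every byte with the same one-byte key (encrypting and decrypting are the same operation)."""
    return bytes(b ^ key for b in data)


def crack_single_byte_xor(ciphertext: bytes, crib: bytes = b"flag{") -> Optional[Tuple[int, bytes]]:
    """Brute-force all 256 one-byte keys and return the one whose output starts with the crib."""
    for key in range(256):
        candidate = xor_single(ciphertext, key)
        if candidate.startswith(crib):
            return key, candidate
    return None  # wrong key model (e.g. a multi-byte key) or wrong crib


ciphertext = xor_single(b"flag{x0r_is_not_encryption}", 0x5A)
print(crack_single_byte_xor(ciphertext))  # (90, b'flag{x0r_is_not_encryption}')
```

For a repeating multi-byte key, the same crib idea works position by position (key byte = ciphertext byte XOR known plaintext byte), but the key length has to be guessed or derived first.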

Hexway Pentest Suite & ChatGPT integration

Using AI to generate content is great, but tools that leverage AI on your behalf take it one step further. One such tool is PentestGPT, which, as the name suggests, applies GPT-4 to penetration testing. You can feed it a port scan and it suggests next steps, such as whether to run directory brute-forcing (dirbusting) on port 80 or whether the SSH version on port 22 is vulnerable to a known exploit. An experienced pentester may already know these things, but the overview helps ensure that nothing is overlooked and no approach is left untried.
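PentestGPT relies on GPT-4 for this reasoning, and the toy sketch below does not reproduce it. It only illustrates the workflow's input and output: it parses open ports from one line of Nmap grepable output (`nmap -oG`), whose `Ports:` field lists `port/state/protocol/owner/service/rpc_info/version/` entries, and then maps each service to a next step using a small hypothetical rule table where an LLM would normally reason. The scan line, rules, and function names are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OpenPort:
    port: int
    proto: str
    service: str
    version: str


def parse_grepable(line: str) -> List[OpenPort]:
    """Extract open ports from one line of `nmap -oG` output."""
    ports = []
    for field in line.split("\t"):
        if not field.startswith("Ports: "):
            continue
        for entry in field[len("Ports: "):].split(", "):
            # Entry layout: port/state/protocol/owner/service/rpc_info/version/
            parts = entry.split("/")
            if len(parts) >= 7 and parts[1] == "open":
                ports.append(OpenPort(int(parts[0]), parts[2], parts[4], parts[6]))
    return ports


# Hypothetical rules standing in for the LLM's suggestions.
NEXT_STEPS = {
    "http": "Brute-force directories and fingerprint the web application",
    "ssh": "Check the SSH version for known vulnerabilities",
    "ftp": "Test for anonymous login",
}


def suggest(ports: List[OpenPort]) -> List[str]:
    """Turn each open port into a one-line next-step suggestion."""
    return [
        f"{p.port}/{p.proto} {p.service} ({p.version or 'unknown version'}): "
        + NEXT_STEPS.get(p.service, "Fingerprint the service and search for public exploits")
        for p in ports
    ]


scan = ("Host: 10.10.10.5 ()\tPorts: 22/open/tcp//ssh//OpenSSH 7.2p2/, "
        "80/open/tcp//http//Apache httpd 2.4.18/, 3306/filtered/tcp//mysql///")
for step in suggest(parse_grepable(scan)):
    print(step)
```

The filtered MySQL port is skipped because only `open` entries are kept; a real assistant would likely still mention it.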

Another useful AI-enabled tool for pentesters is Hive, a self-hosted pentest automation and reporting tool. With its latest update, Hive comes with ChatGPT integration, which means every issue description or recommendation in your report can now be AI-assisted. Just add your OpenAI API key to the configuration and you are good to go: explain bugs, rephrase their impact, or send your own GPT requests.

These are some of the ways Generative AI can help pentesters, but once Artificial Intelligence and Machine Learning are integrated into the stages of the security lifecycle, the possible uses go much further. Let’s take a look at how AI models other than LLMs and Generative AI can aid pentesters, blue teamers, and the overall security posture:

Behavioural Analytics

AI models can be trained specifically to analyze the behavior of traffic entering and leaving your environment. In the initial phase, the model is taught what “good data” looks like:

- the usual traffic

- expected network transactions

- known IPs and CIDRs, etc.

Once the model has learned this, it has a baseline against which to evaluate future data. As soon as it sees something out of the ordinary, or not expected given its training on good data, it flags it as suspicious. This does not replace human expertise, but it adds a smart layer to monitoring. Taking it a step further, responses can be automated once the AI decides something is off, which leads to faster response times. Be warned that there will be false positives, but then again, which protection system doesn't have them?
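To make the baseline idea concrete, here is a minimal sketch that deliberately swaps the trained AI model for a simple statistical baseline: it learns the mean and standard deviation of outbound bytes per flow from known-good traffic, keeps a list of known CIDRs, and flags flows that leave those networks or whose volume is more than three standard deviations from normal. The `Flow` record, the CIDRs, the sample volumes, and the 3.0 threshold are all hypothetical.

```python
import ipaddress
import statistics
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Flow:
    dst_ip: str
    bytes_out: int


class Baseline:
    """Learn what 'good' traffic looks like, then explain why a new flow deviates from it."""

    def __init__(self, known_cidrs: Iterable[str], z_threshold: float = 3.0):
        self.networks = [ipaddress.ip_network(c) for c in known_cidrs]
        self.z_threshold = z_threshold
        self.mean = 0.0
        self.stdev = 1.0

    def fit(self, good_flows: Iterable[Flow]) -> None:
        """Estimate normal outbound volume from known-good flows."""
        sizes = [f.bytes_out for f in good_flows]
        self.mean = statistics.mean(sizes)
        self.stdev = statistics.pstdev(sizes) or 1.0  # avoid division by zero on constant data

    def reasons(self, flow: Flow) -> List[str]:
        """Return the reasons a flow looks suspicious (an empty list means it looks normal)."""
        found = []
        ip = ipaddress.ip_address(flow.dst_ip)
        if not any(ip in net for net in self.networks):
            found.append("destination outside known CIDRs")
        z = (flow.bytes_out - self.mean) / self.stdev
        if abs(z) > self.z_threshold:
            found.append(f"unusual volume (z={z:.1f})")
        return found


good = [Flow("10.0.0.5", n) for n in (1200, 1500, 1100, 1300, 1400, 1250, 1350)]
baseline = Baseline(["10.0.0.0/24", "192.168.1.0/24"])
baseline.fit(good)
print(baseline.reasons(Flow("10.0.0.20", 1320)))  # []
print(baseline.reasons(Flow("203.0.113.9", 50000)))
```

Returning reasons instead of a bare True/False makes alerts easier to triage and to feed into automated responses, and the threshold is the knob that trades missed detections against the false positives mentioned above.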

Adversary Simulation

To test your existing AI-based defenses and try to breach them, you can build models that simulate an adversary. This provides two major benefits:

- You can run periodic automated attacks that learn how the infrastructure is built and then target its weak points.

- The weaknesses these attacks uncover in your existing AI security posture can then be used to improve its robustness and effectiveness.
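No specific adversary model is described here, so the sketch below uses a deliberately simple stand-in: it treats the defense as a black box that only answers "flagged" or "not flagged" and binary-searches the highest attack rate that slips through. The `detector` rule and its 120 requests-per-minute threshold are hypothetical, and the search assumes detection is monotonic in rate (if a rate is flagged, every higher rate is too). Real adversary-simulation models, such as ML-driven evasion, are far more elaborate.

```python
from typing import Callable


def detector(requests_per_minute: int) -> bool:
    """Hypothetical defense under test: flags any source above a fixed request rate.
    The simulated attacker below only ever sees the True/False verdict."""
    return requests_per_minute > 120


def find_evasion_ceiling(is_flagged: Callable[[int], bool], low: int = 0, high: int = 10_000) -> int:
    """Binary-search the highest rate in [low, high] that is NOT flagged.
    Returns -1 if even `low` is flagged. Assumes detection is monotonic in rate."""
    if is_flagged(low):
        return -1
    # Invariant: `low` is known to evade; the answer lies in [low, high].
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if is_flagged(mid):
            high = mid - 1
        else:
            low = mid
    return low


print(find_evasion_ceiling(detector))  # 120
```

A result like this tells the blue team that a low-and-slow attacker staying at or below 120 requests per minute goes unnoticed, which is exactly the kind of weak point that can then be fixed by tuning the model or adding complementary signals.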

Artificial Intelligence and Machine Learning are integrated into our daily lives in ways we sometimes don’t even realize. Whether it is Generative AI like ChatGPT or custom-trained models for medical research, AI is here to stay. And there is no reason for pentesters not to adopt it.

We have seen how pentesters are leveraging this technology: some delegate tasks to it, while others use it as an aide in reaching their objectives. Either way, the end result is an increase in productivity. A recent MIT study found that ChatGPT boosts workers' productivity on writing tasks, so there is even scientific evidence for it! It’s time to embrace AI and make it your friend instead of treating it as your enemy. And surely it won’t take your job.